How computers work? - Part 2: Abstract computers

Part 2 of the series "How computers work?"

In the previous part we've talked about core concepts that were mentioned to be the foundation of our journey to learn about the inner working of computers - information, data, encoding/decoding and computation. So now we are going to take the next step and try to utilize that framework to build an abstract, well-defined mechanism that would enable us to perform those computations and replace the "hand-wavy" and declarational concept of mapping information with something more practical.

Building blocks

First we would like to take a moment to think about what we'll actually need from our imaginary machine to perform the task(s) it's supposed to.

As a starting point we would need some structure - information can be basically anything in any form and similarly for the mapping that transforms them into what we want or some intermediate state along the way to our goal.

For information we already know that when given a well-defined structure we call that data. Let us also assume that the data comes in "blocks" - that is, the data we have will be composed from some "atomic" data pieces and that all of the data we operate on needs to be encoded in that form. A visual example of that:

So in the above the smallest piece of data is a symbol, which is enclosed in a square. We cannot have half of a star or 3/4 moons - each symbol is indivisible. But of course we can have 2 green stars as that doesn't violate the rule.

Considering that each block has a constant and finite size we also require that the number of possible values/states that each block can take is also finite and constant. Thus the values are part of a finite set of discrete values - in a similar way we have our alphabet for language that makes up words.

As for the mapping we need to be able to define a mapping for each possible piece of data which will be the domain of the mapping. Also as we assume our machine only understands and operates on a single set of possible values of the data blocks, thus we would want the results to be part of that set of possible values - otherwise the mappings cannot be well-defined and finite. So the domain and codomain of the mapping is a subset of the set of all possible values in the data.

Also a basic requirement would be determinism - for a given set of input data we always want to get the same result each computation if the mappings remain the same between them. Otherwise we end up with something that just generates random numbers, noise in the long run.

Even though the amount of data can be infite the domain and mappings need to be finite. The reason for that is that the machine would require an infinite amount to time to perform any operation - basically getting stuck for eternity when "turned on". In case of the domain finite but an infite amount of data it will simply work for an infite amount of time, but actually do computations during that time.

To remove the remaining "infiteness" and let it actually do computations we need to also restrict the amount of data that is processed one at a time. As with an infite amount processed at once we run into the same problem as before - it takes an infite amount of time to get the result. This also implies and restricts another thing - there should be order in how the data is processed. As we cannot process it all at once we need for it to be ordered in some fashion for the result to be deterministic (non-random) each time the computation is executed for the same input data.

As we cannot compute all of the data at once we cannot have mappings that work with "context" which is the relation between multiple pieces of the data. This is akin to trying to understand a word where we can only look at each letter independantly - to compute what it means we either need to comprehend it whole at once or use our memory to remember the previous letter(s) and re-use that when we go through the whole word.

Considering the first option is a no-go, we will additionally need some kind of memory where we can store a value from a finite set that will let us track "where we are" along the computation, with some intitial value to know where to start. An additional requirement to the mapping now is to also update the state as part of producing the result. On top of that we should be able to choose which piece of data we process and be able to write the partial results somewhere to later construct the final result of the mapping from these pieces.

We also need a way for it to "know" when the mapping is finished. We can achieve this by introducing an additional state value that should be handled by the mapping that will stop it from doing anything when encountered as the input state.

So to sum it up we have the following boundaries for our abstract machine:

- We operate on data - well-defined and structured information

- The data is divided into indivisble blocks where each block can only take a state/value from a finite, discrete set of values

- The computation is deterministic - for the same mapping and input data we always get the same result

- The mapping(s) domain and codomain are subsets or equal to the set of all possible values mentioned above

- Only a finite amount of data can be mapped at a single time

- The data has order which determines the order in which it will be mapped to the result

- Some sort of memory for the machine as means of holding context between the computed pieces of data

- A way to choose the piece of data being processed and the ability to write the result of that processing somewhere

The Turing machine

We will now look at the probably most well known realization of the ideas above in the form of an abstract computing machine (automata) called the Turing Machine. The name comes from Alan Turing, known as the godfather of computer theory and a genius mathematician that gave us the foundations for the creation of modern computers.

The idea is pretty simple, altough very powerful as we will explain later on. Basically what we will have is:

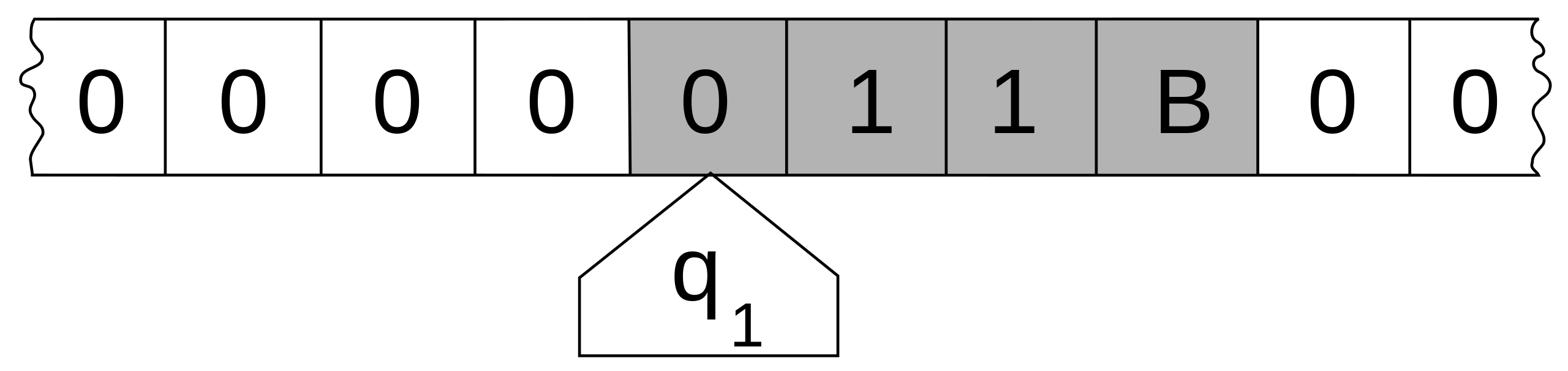

- A tape that is an ordered list of blocks of data (discrete cells) where each block/cell holds a symbol from a finite set of symbols (the alphabet) which are read/processed one at a time

- A head which manipulates a single symbol it points (read/write) and can move on the tape left or right by one position

- One or an infite amount of state registers that hold the state of the machine

- Finite table of instructions which consist of three steps that compute the result or update the machine itself

The steps mentioned in the last point are:

- Erase or write over the current symbol under the head

- Move the head by one position or don't move it at all

- Update the machine state or leave it as it is

Each part of the machine is finite, discrete and distinguishable and considering it has a possibly infite amount of table the available storage is infite.

The tape can be visualized like this:

A simple table with a two element alphabet {A, B} and two possible states {S1, S2} might look like this:

| Symbol | S1 - Write | S1 - Move | S1 - Update state | S2 - Write | S2 - Move | S2 - Update state |

|---|---|---|---|---|---|---|

| A | A | L | S1 | B | R | S1 |

| B | A | R | S2 | B | R | HALT |

The table gives us a finite set of well-defined instructions what to do for each possible symbol and state. So for an example piece of data like BABA and assuming the initial state is S1 starting from the left we get:

('B'ABA, B, S1) -> (A'A'BA, A, S2) -> (AB'B'A, B, S1) -> (ABAA, A, S2)

As we can see we've arrived at the end of the tape but we should move right - we cannot proceed further.

Simple but powerful

Altough the above model created by Alan Turing may seem very simple or even primitive it was proven that it contains all the necessary components to describe any possible algorithm. There is a property that can be ascribed to a computing machine, programming language or any other system that manipulates data with some set of rules which is based on that - Turing completeness.

What it means is that e.g. a programming language can simulate any kind of Turing machine and thus manipulate data in the same way it can. And as we know the Turing machine can be used to describe any kind of algorithm - thus that programming language can be used to implement any kind of algorithm by extension.

I've cut a few corners when describing the Turing machine, especially the theoretical underpinnings related to it and computation as a whole (e.g. halting problem), but going into detail about the subject would probably require a plethora of articles like this and that isn't the goal of the article or series as a whole.

What should be understood and internalized from this part is the idea of doing computation by executing atomic, well-defined steps and tracking the current state of where we are. This is important as this mode of "doing stuff" is the basis of any practical computer, even though sometimes many steps can be done at once (parallelism) or more generally out-of-order (concurrency).

So now we should have a good idea what our computer should be able to do and how it should do it so that we can actually compute anything with it (actually as mentioned before compute anything). But now the question arises how do we implement this idea in the real world?

Well to do that we will need to exploit the rules of physics and somehow find a way to use them in a way that simulates, at least to some acceptable degree, the abstract concepts that we've studied up until now. So next I would like to move towards the mission of finding physical phenomena that we can use to do that with probably some additional information about encoding data as numbers and the binary numeral system.

So please stay tuned and thank you for reading!